Stacey Rolland

The following presentation was made before the Responsible AI for Data Science: Operationalising AI Ethics global conference presented by AI Governance International and AI Guild on November 19, 2020. The live presentation was abbreviated for time.

SUMMARY: After the recent election here in the United States, questions abound regarding what the incoming Biden-Harris Administration means for emerging technologies and responsible innovation. In this presentation, I take a closer look at where the United States currently stands and discuss many of the very real sticking points that obscure the path forward for encouraging and regulating responsible innovation in the U.S.

Dynamic-Changing Election

Unless you’ve been consciously avoiding it, which is perfectly understandable, the U.S. just went through a tumultuous election, with Joe Biden challenging the incumbent, Donald Trump. We can say definitively that Joe Biden will be the next U.S. President and the dynamic in the federal government will change. But how much?

Naturally, people are trying to read the tea leaves for what the Biden Administration will bring for tech. They point to comments President-elect Joe Biden has made in favor of revoking Section 230 of the Communications Decency Act, which shields internet companies from liability for the content they host. They point to Vice President-elect Kamala Harris’s statements as a Senator calling for regulations that ensure tech companies protect consumer privacy. In 2018, she joined several other Senators in calling for federal guidelines against algorithmic bias based on race, gender, and other characteristics.

Rather than read the tea leaves, I’d like to use my time today to take a closer look at where the United States currently stands and discuss many of the very real sticking points that obscure the path forward for encouraging and regulating responsible innovation in the U.S.

Setting the Stage

Legislators and regulators around the globe are seeking to strike the right balance between encouraging innovation and protecting the rights of their citizens. This is a slow, complicated, and ever-developing process. In contrast to the slow progress of government, businesses are quickly deploying rapidly changing technologies.

Some businesses embrace the wide berth that limited government scrutiny provides, while others are seeking greater guidance and clarity. It is important that those businesses, as well as businesses NOT yet thinking about consumer protections, take proactive steps to ensure their internal policies and operations can adapt to regulatory actions when they eventually occur. As such, businesses need to understand the current regulatory landscape and potential paths forward in responsible innovation. Let’s take a look at that now.

U.S. Lagging

An honest assessment of where the U.S. currently stands on regulating emerging technologies responsibly would fairly describe it as “lagging.”

Obama Administration

The first major foray into the federal government’s role in AI came in the final months of the Obama Administration with an initial report outlining recommendations related to AI regulations, security, ethics, and fairness. The Obama Administration also released a strategic plan for providing publicly-funded research and development of AI technologies and a report analyzing the economic and societal effects of automation to better determine the appropriate balance between the benefits and costs of AI technologies. A few months after the release of these reports, the Trump Administration began.

Trump Administration

The Trump Administration’s position on regulating emerging technologies has been to take a hands-off approach and emphasize a standards-driven model (as opposed to a regulatory model). The Administration has emphasized tipping the scales in favor of advancing AI innovation, regulating only in limited areas and only when necessary. In essence: minimal government regulation and maximum business growth.

In February 2019, President Trump signed an executive order entitled “Accelerating America’s Leadership in Artificial Intelligence.” It establishes a U.S. policy of encouraging AI development and innovation in the United States and calls for standards of international engagement. It makes clear that the Administration believes AI innovation is key to economic growth. It also suggests that agencies should not encumber innovation with regulations.

This past January, the Trump Administration built upon the executive order by releasing for public comment draft rules that agencies must follow before considering regulation of AI. Final rules are expected to be released before President Trump leaves office. This means that, before an agency contemplates issuing federal guidance in any area of AI, be it the use of data in targeted advertising, the use of algorithms in criminal sentencing, or the controls around autonomous vehicles, the agency would first need to consult these rules to determine whether regulation is truly necessary.

The draft rules propose agencies consider the following principles when deciding whether to regulate AI. I’ll comment on a few of them.

- Public Trust in AI: Any regulation must encourage reliable, robust, and trustworthy AI.

- Public Participation: Agencies should use the existing mechanisms of notice and comment to allow the public to participate in the development of rules.

- Scientific Integrity and Information Quality: Agencies must use quality scientific research when drafting regulations and must be transparent about the research upon which they are relying.

- Risk Assessment and Management: Agencies must use a risk-based approach. This approach assumes there will be failures and some risk is acceptable in the course of innovation. Agencies should consider the degree and nature of the risks and avoid “unnecessarily precautionary approaches that could unjustifiably inhibit innovation.”

- Benefits and Costs: Agencies should only promulgate regulations if the intended benefits of the regulation outweigh its costs.

- Flexibility: Regulations must be flexible in order to allow for rapid innovations.

- Fairness and Non-Discrimination: Agencies must ensure fairness and non-discrimination in accordance with existing laws. They should evaluate any unintended discrimination in comparison with existing processes.

- Disclosure and Transparency: Agencies should consider the sufficiency of existing regulations before contemplating new measures. What constitutes appropriate disclosure and transparency is context-specific and depends on the balance of the benefits and costs of the regulation.

- Safety and Security: Agencies should consider controls over the confidentiality, integrity, and availability of data, as well as system resilience and cybersecurity risk.

- Interagency Coordination: Agencies should ensure consistency and predictability between regulations across the government.

The Biden Administration likely will take a different approach to these rules, so it’s unclear what effect these final rules will have on agencies in the remaining weeks of the Trump Administration. It remains to be seen whether the Biden Administration will scrap these rules for a different tack or whether it will merely alter the details but keep the Trump Administration’s model of providing guidance to agencies on what factors to weigh when considering rulemaking in emerging technologies.

Congress

Given the ubiquity of data collection and usage across all industries and the rapid deployment of emerging technologies in every sector of the economy, it is surprising how little traction legislation on emerging technology has gained in Congress. Limited Congressional attention has tended to focus on facial recognition, biometrics, and autonomous vehicles. However, a few bills introduced in this Congress (soon to adjourn) are worth noting as stakes in the ground.

The Algorithmic Accountability Act, introduced in both the House and Senate, is the first federal bill that would regulate AI across industries. It would direct the FTC to require companies to perform regular impact assessments on high-risk automated decision systems to measure accuracy, fairness, bias, discrimination, privacy, and security. These impact assessments would be performed with external third parties, such as independent auditors and technology experts, when possible.

Several bills introduced would create separate initiatives, committees, or Centers of Excellence within the federal government to advise on AI innovation; some of these bills speak to advancing responsible principles. For example, the Artificial Intelligence Initiative Act would establish a National AI Research and Development Initiative that would, among other things, seek to identify and minimize inappropriate bias in datasets and algorithms and call for research into algorithmic accountability and the societal and ethical implications of AI. None of these measures would confer actual rulemaking authority.

Federal Regulatory Agencies

Without new legislative authorities, federal agencies seeking to provide guardrails and guidance to businesses for responsible AI can either issue standards for consideration or depend on existing authorities. This doesn’t mean there haven’t been opportunities for creativity within federal agencies.

Standards

The National Institute of Standards and Technology (NIST), within the U.S. Department of Commerce, has provided a valuable starting point for national standards for responsible AI. For example, its Plan for Federal Engagement in Developing Technical Standards lays out nine standards for AI safety, risk management, explainability, and security, along with societal and ethical considerations, governance, and privacy.

Some agencies have begun contemplating standards for their covered sectors in areas like data rights and data management, especially in the fintech space.

Existing Authorities

U.S. regulators are deeply aware that the business sector needs guardrails and guidance. With no new legislation in this area, regulatory agencies are depending on their existing authorities, such as Section 5 of the FTC Act and the UDAAP (unfair, deceptive, or abusive acts or practices) provisions of the Dodd-Frank Act, which focus on unfair and deceptive business practices.

Depending on existing regulations means that traditionally highly regulated industries like financial services and healthcare will be subject to more scrutiny as regulators stretch their authorities to cover emerging technologies. I believe that other industries can look to financial services, in particular, to glean lessons and best practices in the coming years as to what regulatory scrutiny might look like in their respective industries, especially as it pertains to data usage, data access, and protections.

Regulators are not just looking to their existing authorities; nearly every agency has set up an Office of Innovation that is both outwardly and inwardly focused. These special offices are actively engaging with businesses to learn how they are using emerging technologies. They are setting up “sandboxes” that allow businesses to experiment with novel innovations outside traditional regulatory bounds, but in controlled environments under agency supervision. In addition, these Offices of Innovation are applying lessons learned from the business sector to their own applications of emerging technology, or RegTech, to better serve their oversight and rulemaking mandates. Businesses are well served to actively engage with their regulators and take advantage of these opportunities to try out new technologies in these sandboxes, where available.

States

Filling the vacuum left by the lack of federal legislation and new regulations, individual states have taken the lead in advancing laws around responsible innovation. Arguably the most significant state advancement has been California’s data privacy regime. The election on November 3rd not only brought a new Presidential Administration, it also brought enhancements to the California regime. I won’t go through the details of the California Consumer Privacy Act (CCPA) or the California Privacy Rights Act (CPRA), but I will highlight that the CPRA establishes a dedicated regulatory agency, the California Privacy Protection Agency, for the protection of consumer privacy within California. It will be interesting to see in the coming years how the California model of consolidating oversight of these issues within one agency affects debates in the federal government about the appropriate regulatory body for data issues that cut across all industries and sectors.

California is not the only state making advances in the areas of responsible innovation. For example, bills relating to data privacy were considered in at least 30 states and Puerto Rico in 2020, though only a small handful of sector-specific measures actually became law. The coming years are expected to bring more legislation in these areas.

The potential for states to advance in different directions sets up a collision course with the federal government, when it does eventually act. States will have set up separate and possibly conflicting regulatory regimes. How will federal enforcement overlay atop the separate states?

This is a major sticking point that will prove difficult to resolve, so let’s dive deep into this and other current sticking points in the advancement of responsible innovation.

Sticking Points to Watch

Legislative Sticking Points

We can look to federal privacy legislation to glean lessons regarding future legislative action around emerging technologies. Here are a few sticking points in privacy legislation that one can easily see being replicated in the emerging technology/AI space.

Federal Law Preemption of State Law

Those of you monitoring the two major pieces of federal consumer data privacy legislation in the U.S. Senate are no doubt familiar with the challenges the federal government will face in interacting with existing state laws. Under the concept of preemption, federal law would supersede state law. Industry generally favors broad preemption, which would harmonize the patchwork of state laws and provide greater compliance certainty. Privacy and consumer protection advocates, by contrast, generally resist broad preemption, because the strictest privacy laws tend to be found at the state level.

While the policy approaches are remarkably similar between the two major privacy bills in the Senate, they split along the preemption fault line. The Republican bill includes an express preemption provision, providing certainty and compliance relief to industry, while the Democratic bill seeks to allow states to provide further consumer protections for their citizens.

Private Right of Action

A second major sticking point is whether individual consumers should be given a private right of action to sue a company for damages in the event of a violation of their privacy rights. The Democratic bill would provide a private right of action, while the Republican bill would allow redress through the FTC and state attorneys general.

Preemption and private right of action are not sticking points for privacy legislation alone. If states continue to legislate in areas of responsible innovation and the federal government eventually begins considering national-level legislation in these areas, the issues of preemption and private right of action no doubt will be debated again and again. Indeed, early debates in responsible tech have already begun with the Algorithmic Accountability Act, which would not preempt state law and does not provide a private right of action. Instead, the FTC would enforce it under its authority over unfair and deceptive acts and practices, and state attorneys general could bring civil suits.

Dedicated Data Protection Agency vs. Sectoral Approach

Another interesting question is who should regulate responsible innovation. Under our current sectoral approach, individual agencies depend on their existing authorities to provide guidance to their covered industries. There have been increasing calls for a separate dedicated data protection agency, which would provide targeted oversight of AI and emerging technologies. At least one major bill was introduced in Congress to create a dedicated agency. And, as I mentioned, California has gone in that direction.

The Biden-Harris campaign has not commented on these and many other emerging technology / responsible innovation issues. And that is because of our next sticking point: The COVID-19 pandemic and its impact on the U.S. economy.

Political Sticking Points

Coronavirus and Economic Recovery Priorities

Obviously, the COVID-19 pandemic is top of mind in the United States, especially as we head into what promises to be a very tough winter of disease spread. The U.S. economy has been gored by the pandemic and the incoming Biden Administration will be focused on limiting the spread of the virus and on economic response and recovery.

The first years of the Biden Administration, if not the entire first term, no doubt will be dedicated to COVID-19 response and the pandemic aftermath, specifically in the areas of economic recovery, caregiving, and racial equity. However, it is worth noting that privacy and technology play key roles in each of these areas. Just to name a few examples:

COVID-19: Health care data privacy, contact tracing, data portability, children’s privacy in remote learning.

Economy: Technological innovation as a driver of future U.S. economic growth, fintech advances in alternative credit, discriminatory algorithms in credit underwriting.

Racial Unrest and Equity: Facial recognition and biometric data collection in law enforcement, discrimination in uses ranging from educational exam proctoring to smartphone apps, discriminatory algorithms in hiring, predictive policing.

So, it is not necessarily true that the status quo will remain untouched for the next four years. There are plenty of opportunities for meaningful legislative action in responsible innovation.

Divided Congress: Republican Senate / Democratic House

Right now, there are 50 Senators who caucus with Republicans and 48 Senators who caucus with the Democrats – with two seats still to be determined. We won’t know which party controls the Senate until after run-off elections for those two Senate seats (both in Georgia) on January 5th. So, it is possible the Democrats will have control of the Senate, House, and White House beginning in January. If that does happen, the Democratic majority will be razor thin, which doesn’t necessarily make passage of President Biden’s priorities particularly easy.

If the Senate remains under Republican control, there is still room for bipartisan negotiation. President-elect Biden has a history of successfully negotiating tough legislation with Republican Majority Leader Mitch McConnell. What’s more, many responsible technology issues are actually bipartisan, as we see with consumer data privacy. Common ground can be found in many technology issues. If Congress is looking for bipartisan issues to show they can advance any legislation, they can find them in technology policy.

Why would the Republican Senate look for bipartisan issues? Under Majority Leader McConnell’s leadership, the Senate spent much of this Congress confirming President Trump’s conservative judicial nominees to lifetime appointments. Under President Biden, Leader McConnell is unlikely to be as generous in allowing votes on less conservative judges nominated by a Democratic president. As a result, Senators up for reelection may need some legislative accomplishments to tout on the campaign trail in two years.

Given these dynamics, we probably won’t see major, sweeping legislation dedicated to responsible technology in the next four years. Pandemic response and economic recovery measures likely will burn through significant amounts of political capital on both sides. Barring some major AI catastrophe that requires Congressional action, regulatory agencies will continue to depend on their existing authorities to provide clarity on responsible innovation.

Regulatory Sticking Points

Limits of Existing Authority

Without new legislation to expand existing authorities or to grant new authorities to regulators, regulatory agencies concerned about providing guidance on the development and deployment of emerging technologies must depend on interpretations of existing authorities. We are likely to see these regulators continue to use existing authorities to provide guidance to their covered businesses in these new technological areas. Many of their existing authorities are technology-agnostic, so they are able to adjust as technologies advance.

However, outside industries that are already heavily regulated, or within agencies whose current authorities can’t reasonably be stretched to capture rapidly evolving emerging technologies, regulators have limited reach to provide guidance for uncertain businesses or guardrails for consumer protections.

Taxonomy / Common Language

As with any nascent industry, the process of establishing common terminology can be bumpy. This is an issue not only in the United States but across the globe. While looking at the same technologies, different countries are advancing policies that use different terms and definitions, compounding the difficulty global businesses face in reconciling requirements and designing adequate compliance measures.

One of the first projects regulators will need to tackle is establishing terminology and taxonomy across agencies and attempting to reconcile differences in terms and definitions across states and other countries. Businesses and advocates can play a key role right out of the gate by educating regulators on their technologies and helping to clarify the scope of definitions.

Striking Right Balance Between Innovation and Enforcement

Regulators will be conscious of the balance between fostering an environment of innovation for businesses and protecting the rights and interests of people. As we discussed earlier, we’re expecting the Trump Administration to soon release its final rules on the factors agencies must weigh before deciding whether to promulgate regulations in AI and emerging technologies. And, as we’ve seen, the current balance is tilted far in favor of unregulated innovation.

As I mentioned earlier, it isn’t hard to guess that the Biden Administration would tilt that balance back toward the middle by emphasizing consumer protections, data rights, and anti-discrimination and anti-bias controls. What form that enforcement might take remains an open question.

Role of Federal Government as Enforcer

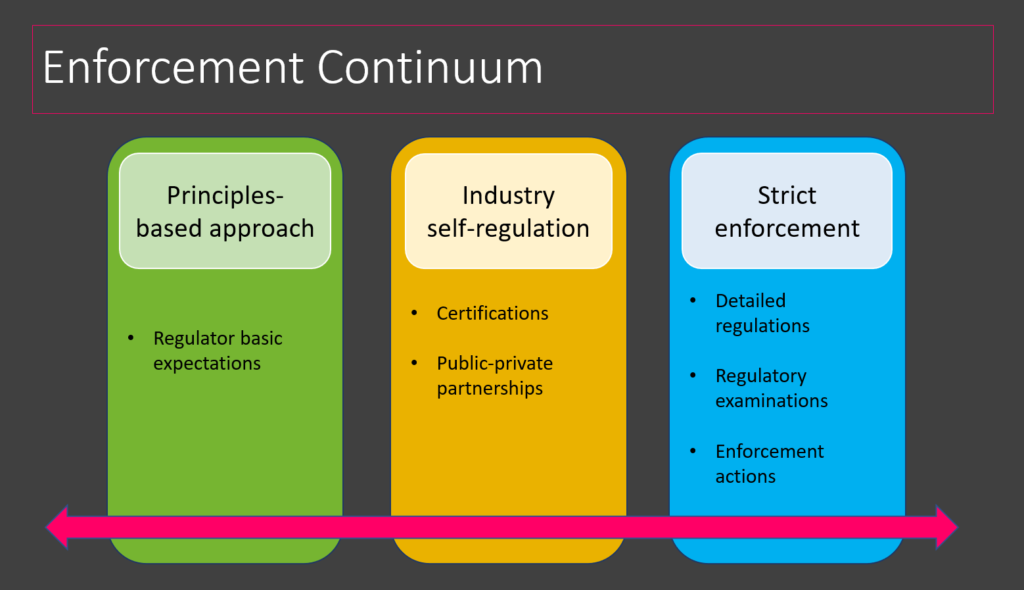

There is a continuum of methods and approaches federal agencies can take with regard to the strength of regulatory requirements.

In the early stages of an industry, a principles-based approach makes a lot of sense. Regulators can establish basic expectations and then keep an eye on the industry as it develops. Coming out early with strict enforcement measures might not capture the direction in which the industry develops, especially in an area as rapidly evolving as AI. Of course, a principles-based approach provides some level of clarity for businesses but no teeth for consumer protections. And, as we’ve already mentioned several times, some existing areas of strict enforcement can absorb new technologies.

Under a principles-based regulatory framework, if consumer demands for greater protections increase, and businesses continue to call for more certainty in how they should approach designing responsible principles into their technologies, industry self-regulation regimes and third-party certifications could play a key role. In this regard, third-party organizations essentially step into the role of regulator. We can think of this type of regime as a public/private partnership: a third-party organization builds upon federal standards and establishes a certification program. Businesses can rely on their industry certification under future enhanced regulatory scrutiny, and customers can gain an increased level of trust in an unregulated market.

As AI grows, expands, and matures, surely federal agencies will begin to move more in the direction of strict enforcement. In that case, if a strong public/private partnership exists, future regulations will be built upon existing industry accountability programs.

State Action Could Force Federal Action

Data privacy provides an interesting real-time example of the factors that drive federal action. As we know, California has passed significant data privacy laws. Other states are expected to follow suit. Since each state acts independently, the laws are at times conflicting or not easily reconciled. As we just discussed, this patchwork of different requirements sets up a compliance nightmare for companies that do business in multiple states. Many experts believe federal action will be forced when enough states enact their own data privacy laws, which could happen within the next two years.

There have been suggestions that, in a post-Schrems II environment, California potentially could apply for an adequacy decision on cross-border data flows with the EU, while the rest of the United States could not. The sticking point, of course, is that California does not have control over federal intelligence collection processes, which underlie the Schrems II decision in the first place. However, the mere suggestion that individual states could have diplomatic relationships with other countries separate from those of the United States federal government is an interesting dynamic to keep an eye on.

Federal Funding

The Trump Administration’s executive order established American leadership in AI as the second-highest research and development priority for 2020, after national security. However, agencies were tasked with shifting existing funding to these new priorities.

The two main paths to funding are more difficult in a divided Congress. One path is through new legislation, which would authorize additional funding to innovative new programs and requirements. But, with limited movement on significant new legislation expected in the coming years to provide new funding, agencies will need to be creative under not only existing authorities but also existing levels of funding.

The second path would be through additional funding provided within annual budget appropriations bills, in which Congress specifically designates a portion of funding for specific areas within agencies. In a divided Congress, passage of appropriations bills also becomes more difficult, as the Democratic majority in the House and the Republican majority in the Senate often can’t reconcile their two funding bills. As a result, we’ve seen “continuing resolutions” repeatedly passed, in which the federal government continues to function by temporarily extending existing levels of funding. (As an aside, it is usually when a continuing resolution fails to pass that the federal government shuts down until agreement can be reached.)

So, while AI has been established as a priority in American R&D, and will most certainly play a significant role in the future American and global economy, meaningful federal funding for advancing both innovation and guidance could be a challenge in the United States.

Predictions

Taking all of these sticking points into consideration, let’s get to the fun part and make some predictions.

- Federal agencies will provide increasingly detailed guidance from a standards-based approach and solicit public comments on the appropriate role of federal regulation of emerging technologies in different sectors. These actions will lay the foundation for future regulatory action. The Biden Administration could seek to unify approaches across the vast web of federal agencies.

- Regulatory scrutiny into responsible innovation will begin with questions based on existing regulations on unfair and deceptive acts or practices, such as Section 5 of the FTC Act and UDAAP under the Dodd-Frank Act.

- Third-party organizations will grow in different sectors, providing business best practices and certifications. They also will play a key role in reconciling terminologies and taxonomies.

- Detailed impact assessments that show how businesses are thinking about and controlling for unintended consequences and mitigating risk will become a key standard across industries. Requirements in bills like the Algorithmic Accountability Act that third-party organizations and industry experts participate in these assessments will further formalize them across industries.

- Increasing numbers of states will advance detailed laws in different areas of AI and responsible innovation, creating a patchwork system that complicates compliance for businesses.

- California’s dedicated data privacy agency will serve as an interesting experiment that will reveal best practices and lessons learned for the federal government.

- Meanwhile, the American public will continue to become more aware of debates around data rights and algorithmic risk, which increasingly will put pressure on companies to operationalize these responsible principles. Businesses will increasingly seek clarity and compliance relief from the federal government.

Conclusion

The sticking points we discussed here highlight the importance of conferences like this one. Companies seeking clarity on how best to put responsible innovation principles into action are ultimately looking to build trust with their customers. Sharing best practices and industry methodologies will allow companies to better respond to and comply with regulatory expectations in whatever form they come.