Stacey Rolland

Lessons from a Basement Renovation

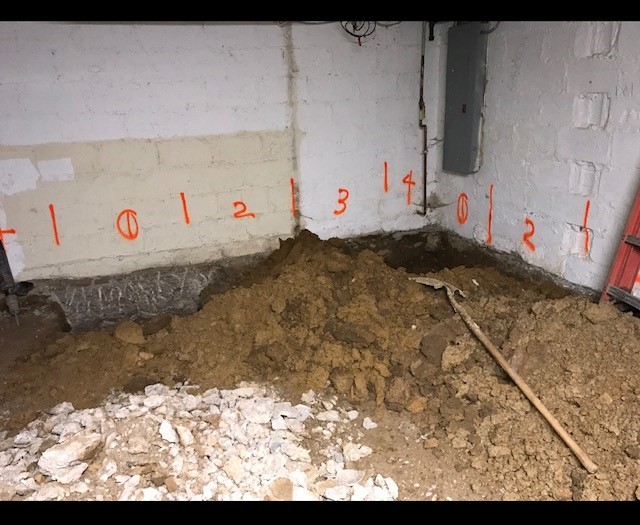

My husband and I purchased an older home several years ago. In order to bring the house up to modern standards, we hired a contractor to dig out and finish the basement. To reinforce the foundation as the ground was removed, the contractor carefully and methodically added underpinning to the foundation walls. Without that underpinning, the entire house could have collapsed.

Adding underpinning to the foundation of an existing house is difficult, tedious, and expensive, and it endangers the core structure of the house. By comparison, when a new house is built, the underpinning is part of the foundation as it is laid, which ensures the house is structurally sound into the future. Obviously, it is less than ideal to underpin a house after the foundation is set and the house is built.

In the U.S., both the public and private sectors are in the early stages of rapidly laying the foundations of AI systems. Few regulatory guidelines exist to ethically shore up the foundations of AI to ensure privacy protections and to prevent bias, discrimination, and negative consequences. If AI is rapidly designed and deployed without ethical underpinnings, catastrophic social, political, and economic consequences could result.

Race for Global Dominance

The United States’ technological race with China is one of the major drivers of this acceleration of AI deployment. China’s “advantage” in the AI race is its lack of regulation to restrain uses of data and deployments of AI that violate human rights. As we see with physical structures built without regulatory guidelines, weak foundations can only hold for so long before catastrophic consequences bring structures down.

The Trump Administration supports an aggressive race for U.S. global dominance in AI. In 2019, the President signed an Executive Order on Maintaining American Leadership in Artificial Intelligence, which introduced six strategic Federal objectives to promote and protect American advancements in AI (1. investment in R&D public/private collaboration; 2. enhancement of Federal data, models, and computing resources; 3. reducing barriers; 4. ensuring security standards; 5. workforce training; 6. action plan to protect U.S. advantage against strategic competitors and foreign adversaries). The Trump Administration followed by launching https://www.whitehouse.gov/ai/. The White House in January 2020 proposed guidance for the regulation of AI applications, which will “help Federal regulatory agencies develop and maintain approaches for the safe and trustworthy creation and adoption of new AI technologies without stifling innovation.” According to the guidance, “Promoting innovation and growth of AI is a high priority of the United States government.” Upon closer inspection, the guidance stresses that agencies should “avoid a precautionary approach” while taking a risk-based approach that focuses on “the effect of the potential regulation on AI innovation and growth.” It appears the preliminary guidance for regulation of AI applications weighs heavily toward laying a fast AI foundation with limited ethical underpinning.

If Vice President Joe Biden enters the White House in 2021, his administration will inherit the global AI race from President Trump. Senator Kamala Harris would be uniquely positioned to take a lead role as Vice President in ensuring AI is built upon an ethical and responsible foundation. As California Attorney General, she introduced the OpenJustice data project, which combines California Department of Justice statistical data with other public datasets to encourage transparency and allow experts to analyze law enforcement interactions in communities. As Senator, she called for regulations that ensure consumer privacy is protected by tech companies. In 2018, she joined several other Senators in sending a series of letters to federal agencies calling for guidelines against algorithmic bias based on race, gender, and other characteristics. She was a sponsor of the AI in Government Act of 2018 and 2019 to direct a cohesive federal AI policy through the General Services Administration (GSA), which would include “best practices for identifying, assessing, and mitigating any discriminatory impact or bias” or “any unintended consequence of the use of artificial intelligence by the federal government.” The bill also would add to the ethical underpinning of AI deployment within the federal government by creating a Center of Excellence within GSA to assist federal agencies in these efforts. In April 2019, Harris expressed concern about building AI applications with inclusions of bias within their foundation, stating, “When we look also at these emerging fields and look at issues like AI – artificial intelligence and machine learning – there is a real need to be very concerned about how [racial bias is] being built into it.”

No matter who the next U.S. President might be, we can’t ultimately win the race for sustainable AI if American ideals are sacrificed and if technological advancement proceeds without adequate ethical protections. Usually, in the months before a major election, policy-making activities in Federal agencies and in Congress come to a standstill. This means that momentum will slow on potential ethical guidance while unrestrained AI design and deployment will advance at full speed.

What Happens Next is Critical to AI Structural Integrity

After the 2020 Election dust settles, the government will need to catch up. Congress must set an agenda for AI innovation that includes a strong focus on ethical underpinnings. Federal agencies should use their existing regulatory powers to establish clear and actionable guidelines to identify, assess, and mitigate bias, discrimination, and unintended consequences.

In the meantime, we need a national debate about what rights and protections Americans expect in the ethical deployment of AI in the public and private sectors. While we have enough to worry about right now, a lack of ethical underpinning to shore up AI will compound existing injustices and create future civil rights crises. Americans need to become better educated about the current uses of their personal data and potential consequences of under-regulated AI. The public and private sectors must be more transparent in explaining their current activities and their AI goals. Transparency, explainability, and informed engagement will help advance the right policies to build ethics into AI design in America.

Laying an AI foundation without ethical underpinning, but marred by potential inclusions of bias and discrimination, will threaten the soundness of AI in America. The foundations of AI applications are being laid more rapidly every day, while the ethical underpinning of clear and actionable regulatory guidelines lags behind. Strong ethical protections that do not unreasonably constrain the development of innovative technology must be included within our AI foundation. As with basement construction, it will be difficult to underpin protections into AI once the foundation has been laid and the house has been built.